Every four years, I’m excited to be a part of and witness one of America’s greatest displays of democracy: the presidential election. After months of campaigns and debates with candidates sharing their platforms and plans, citizens head to the polls to cast their votes for the candidate they believe is most suited to lead our country based on the best information they have been given.

But the fairness of America’s 2024 presidential election is at risk because some of the information that Americans are receiving this cycle is questionable. Recent innovations in generative artificial intelligence mean bad actors’ ability to spread misinformation and influence citizens has never been greater.

There is a lot to love about generative AI. As a technology fanatic, I’m excited to think about the many uses of this advancement, and I consider it one of the most revolutionary innovations for society since the creation of social media. But, just like social media, I fear what it can do in vindictive hands. Congress and state legislatures must act now to protect our democracy from those abusing this powerful tool.

AI has incredible power and potential to misinform and manipulate Americans about our elections or, even worse, to discourage them from voting altogether. Scarier still, AI can be deployed this way efficiently and cheaply.

We’re already seeing generative AI being mobilized to spread election misinformation in our country. During the New Hampshire primary, a robocall impersonating President Joe Biden reached thousands of citizens, encouraging them to “save your vote” for the November general election in a possible attempt at voter suppression.

Globally, we see AI being used in similar ways. More than 50 countries are holding elections in 2024. Recently, a female politician in Bangladesh was targeted when an AI-generated photo portraying her in a bikini surfaced, intended to erode public support for her in a predominantly Muslim society.

Authoritarian regimes, including Russia and China, understand the threat that informed citizens, both at home and abroad, pose to their own contemptible agendas, and they will be keen to manipulate our elections this year. Regardless of where the misinformation comes from, the goal is simple: use AI as an effective tool to further widen the polarization and divisions we’re already seeing.

So what can we do about it? Minnesota recently passed a law banning deepfakes created to influence our elections. A violator can be fined or even imprisoned for taking part in efforts to misinform the public. Similar legislation in other states is a promising sign.

The Minnesota law is a great start, but this issue must be addressed at the congressional level if we are to maintain confidence in our elections, because generative AI is only growing more sophisticated.

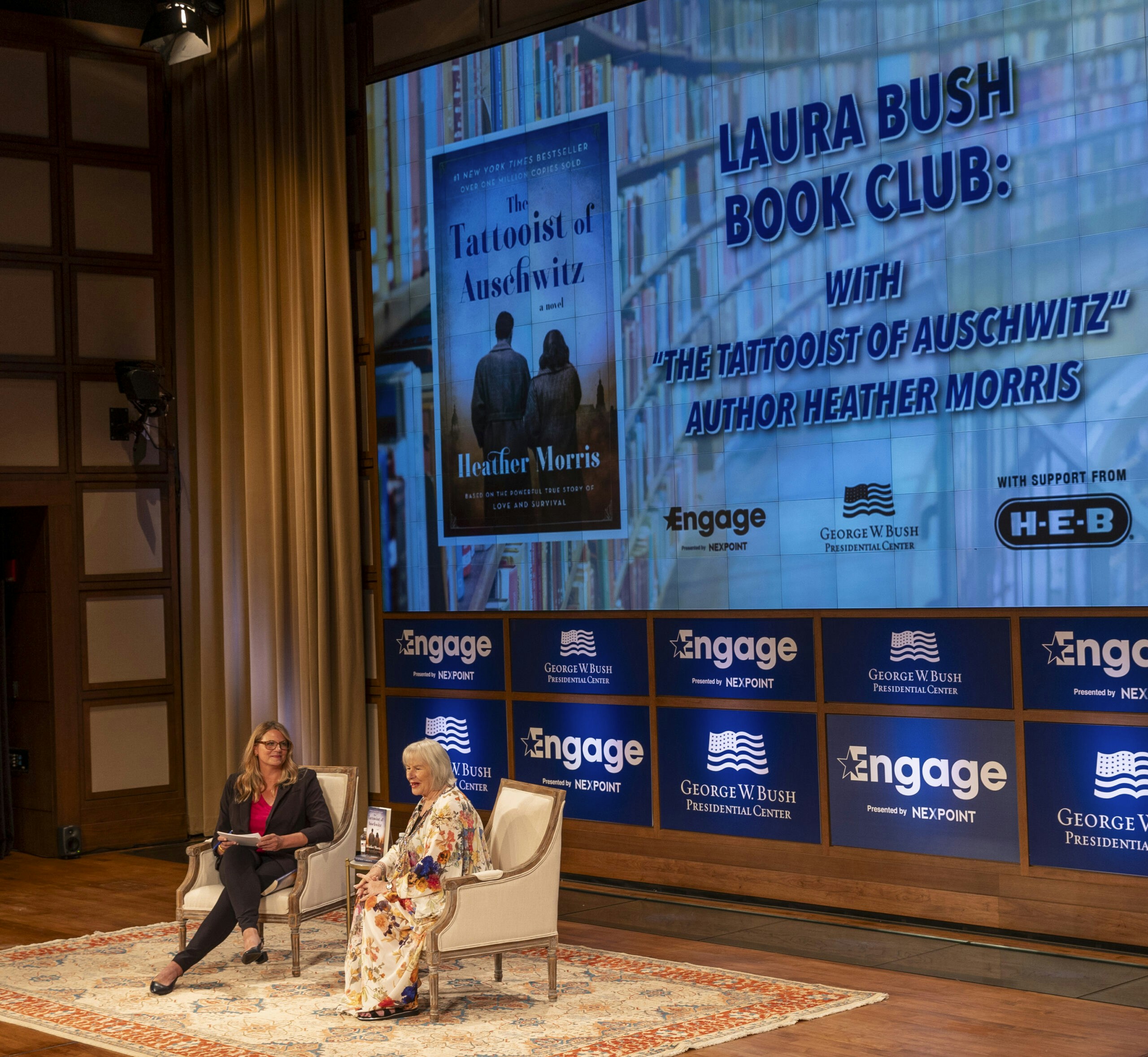

About 95% of Americans believe that misinformation is a problem when it comes to current events, according to a recent Pearson Institute/AP-NORC poll. At the George W. Bush Institute, we are working to strengthen our nation’s democracy by reinforcing important initiatives such as civics education, pluralism, trust, civility, and citizenship.

The conversation around generative AI can become a great bipartisan effort in our country. It’s an opportunity for the public and private sectors to collaborate on innovative ways to combat misinformation. National forums with leading thinkers across sectors are integral to finding effective ways to identify what is real and what is generated.

It starts with AI developers and their companies, of course, but we shouldn’t leave policy decisions up to them. We’ve already seen the inadequacy of deferring too much to the private sector on similar decisions about social media. We must not leave the responsibility for AI’s impacts solely to those who created it.

Ultimately, American leadership on this issue matters. We have an opportunity to lay the groundwork for global efforts to thwart misinformation.

As we head to the polls this year, Americans will need more support than ever in distinguishing fact from fiction. Being informed, and having the ability to be informed, is something all of us should care deeply about.

Generative AI brings remarkable innovations, but, like any technology, it can be used in malicious ways. We must act now if we are to maintain a high level of confidence in our 2024 election.